mirror of

https://github.com/ivuorinen/docker-elk.git

synced 2026-01-26 03:34:01 +00:00

Initial commit


43

.env

Normal file

@@ -0,0 +1,43 @@

COMPOSE_PROJECT_NAME=elastic
ELK_VERSION=8.10.2

#----------- Resources --------------------------#
ELASTICSEARCH_HEAP=1024m
LOGSTASH_HEAP=512m

#----------- Hosts and Ports --------------------#
# To be able to further "de-compose" the compose files, get hostnames from environment variables instead.

ELASTICSEARCH_HOST=elasticsearch
ELASTICSEARCH_PORT=9200

KIBANA_HOST=kibana
KIBANA_PORT=5601

LOGSTASH_HOST=logstash

APMSERVER_HOST=apm-server
APMSERVER_PORT=8200

#----------- Credentials ------------------------#
# Username & Password for Admin Elasticsearch cluster.
# This is used to set the password at setup, and used by others to connect to Elasticsearch at runtime.
# USERNAME cannot be changed! It is set here for parameterization only.
ELASTIC_USERNAME=elastic
ELASTIC_PASSWORD=changeme
AWS_ACCESS_KEY_ID=nottherealid
AWS_SECRET_ACCESS_KEY=notherealsecret
ELASTIC_APM_SECRET_TOKEN=secrettokengoeshere

#----------- Cluster ----------------------------#
ELASTIC_CLUSTER_NAME=elastdocker-cluster
ELASTIC_INIT_MASTER_NODE=elastdocker-node-0
ELASTIC_NODE_NAME=elastdocker-node-0

# Hostnames of master-eligible Elasticsearch instances. (matches compose generated host name)
ELASTIC_DISCOVERY_SEEDS=elasticsearch

#----------- For Multinode Cluster --------------#
# Other nodes
ELASTIC_NODE_NAME_1=elastdocker-node-1
ELASTIC_NODE_NAME_2=elastdocker-node-2
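As a rough illustration of how `KEY=VALUE` settings like the ones in the `.env` file above could be read outside of Compose, the sketch below parses such lines while skipping blank lines and `#` comments. The helper name `parse_env` is hypothetical and not part of this repository, and Docker Compose applies its own, richer parsing rules (quoting, interpolation) that this sketch does not attempt to reproduce.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, ignoring blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not an assignment; skip it rather than guess
        values[key.strip()] = value.strip()
    return values


# A few lines taken from the .env file above.
sample = """\
ELK_VERSION=8.10.2

#----------- Resources --------------------------#
ELASTICSEARCH_HEAP=1024m
LOGSTASH_HEAP=512m
"""
config = parse_env(sample)
print(config["ELK_VERSION"], config["ELASTICSEARCH_HEAP"])
```

A plain dictionary is enough here because every value in the file is an unquoted string; anything needing quoting or variable expansion should go through Compose itself.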

5

.gitattributes

vendored

Normal file

@@ -0,0 +1,5 @@

# Declare files that will always have LF line endings on checkout.
docker-healthcheck text eol=lf
*.sh text eol=lf
setup/*.sh linguist-language=Dockerfile
Makefile linguist-vendored

76

.github/CODE_OF_CONDUCT.md

vendored

Normal file

@@ -0,0 +1,76 @@

# Contributor Covenant Code of Conduct

## Our Pledge

In the interest of fostering an open and welcoming environment, we as
contributors and maintainers pledge to make participation in our project and
our community a harassment-free experience for everyone, regardless of age, body
size, disability, ethnicity, sex characteristics, gender identity and expression,
level of experience, education, socio-economic status, nationality, personal
appearance, race, religion, or sexual identity and orientation.

## Our Standards

Examples of behavior that contributes to creating a positive environment
include:

* Using welcoming and inclusive language
* Being respectful of differing viewpoints and experiences
* Gracefully accepting constructive criticism
* Focusing on what is best for the community
* Showing empathy towards other community members

Examples of unacceptable behavior by participants include:

* The use of sexualized language or imagery and unwelcome sexual attention or
  advances
* Trolling, insulting/derogatory comments, and personal or political attacks
* Public or private harassment
* Publishing others' private information, such as a physical or electronic
  address, without explicit permission
* Other conduct which could reasonably be considered inappropriate in a
  professional setting

## Our Responsibilities

Project maintainers are responsible for clarifying the standards of acceptable
behavior and are expected to take appropriate and fair corrective action in
response to any instances of unacceptable behavior.

Project maintainers have the right and responsibility to remove, edit, or
reject comments, commits, code, wiki edits, issues, and other contributions
that are not aligned to this Code of Conduct, or to ban temporarily or
permanently any contributor for other behaviors that they deem inappropriate,
threatening, offensive, or harmful.

## Scope

This Code of Conduct applies both within project spaces and in public spaces
when an individual is representing the project or its community. Examples of
representing a project or community include using an official project e-mail
address, posting via an official social media account, or acting as an appointed
representative at an online or offline event. Representation of a project may be
further defined and clarified by project maintainers.

## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be
reported by contacting the project team at sherifabdlnaby@gmail.com. All
complaints will be reviewed and investigated and will result in a response that
is deemed necessary and appropriate to the circumstances. The project team is
obligated to maintain confidentiality with regard to the reporter of an incident.
Further details of specific enforcement policies may be posted separately.

Project maintainers who do not follow or enforce the Code of Conduct in good
faith may face temporary or permanent repercussions as determined by other
members of the project's leadership.

## Attribution

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,
available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html

[homepage]: https://www.contributor-covenant.org

For answers to common questions about this code of conduct, see
https://www.contributor-covenant.org/faq

77

.github/CONTRIBUTING.md

vendored

Normal file

@@ -0,0 +1,77 @@


# Contributor Covenant Code of Conduct

## Our Pledge

In the interest of fostering an open and welcoming environment, we as
contributors and maintainers pledge to make participation in our project and
our community a harassment-free experience for everyone, regardless of age, body
size, disability, ethnicity, sex characteristics, gender identity and expression,
level of experience, education, socio-economic status, nationality, personal
appearance, race, religion, or sexual identity and orientation.

## Our Standards

Examples of behavior that contributes to creating a positive environment
include:

* Using welcoming and inclusive language
* Being respectful of differing viewpoints and experiences
* Gracefully accepting constructive criticism
* Focusing on what is best for the community
* Showing empathy towards other community members

Examples of unacceptable behavior by participants include:

* The use of sexualized language or imagery and unwelcome sexual attention or
  advances
* Trolling, insulting/derogatory comments, and personal or political attacks
* Public or private harassment
* Publishing others' private information, such as a physical or electronic
  address, without explicit permission
* Other conduct which could reasonably be considered inappropriate in a
  professional setting

## Our Responsibilities

Project maintainers are responsible for clarifying the standards of acceptable
behavior and are expected to take appropriate and fair corrective action in
response to any instances of unacceptable behavior.

Project maintainers have the right and responsibility to remove, edit, or
reject comments, commits, code, wiki edits, issues, and other contributions
that are not aligned to this Code of Conduct, or to ban temporarily or
permanently any contributor for other behaviors that they deem inappropriate,
threatening, offensive, or harmful.

## Scope

This Code of Conduct applies within all project spaces, and it also applies when
an individual is representing the project or its community in public spaces.
Examples of representing a project or community include using an official
project e-mail address, posting via an official social media account, or acting
as an appointed representative at an online or offline event. Representation of
a project may be further defined and clarified by project maintainers.

## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be
reported by contacting the project team at [INSERT EMAIL ADDRESS]. All
complaints will be reviewed and investigated and will result in a response that
is deemed necessary and appropriate to the circumstances. The project team is
obligated to maintain confidentiality with regard to the reporter of an incident.
Further details of specific enforcement policies may be posted separately.

Project maintainers who do not follow or enforce the Code of Conduct in good
faith may face temporary or permanent repercussions as determined by other
members of the project's leadership.

## Attribution

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,
available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html

[homepage]: https://www.contributor-covenant.org

For answers to common questions about this code of conduct, see
https://www.contributor-covenant.org/faq

12

.github/FUNDING.yml

vendored

Normal file

@@ -0,0 +1,12 @@

# These are supported funding model platforms

github: sherifabdlnaby
patreon: # Replace with a single Patreon username
open_collective: # Replace with a single Open Collective username
ko_fi: # Replace with a single Ko-fi username
tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel
community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry
liberapay: # Replace with a single Liberapay username
issuehunt: # Replace with a single IssueHunt username
otechie: # Replace with a single Otechie username
custom: # Replace with up to 4 custom sponsorship URLs e.g., ['link1', 'link2']

38

.github/ISSUE_TEMPLATE/bug_report.md

vendored

Normal file

@@ -0,0 +1,38 @@

---
name: Bug report
about: Create a report to help us improve
title: ''
labels: 'bug'
assignees: ''

---

**Describe the bug**
A clear and concise description of what the bug is.

**To Reproduce**
Steps to reproduce the behavior:
1. Go to '...'
2. Click on '....'
3. Scroll down to '....'
4. See error

**Expected behavior**
A clear and concise description of what you expected to happen.

**Screenshots**
If applicable, add screenshots to help explain your problem.

**Desktop (please complete the following information):**
 - OS: [e.g. iOS]
 - Browser [e.g. chrome, safari]
 - Version [e.g. 22]

**Smartphone (please complete the following information):**
 - Device: [e.g. iPhone6]
 - OS: [e.g. iOS8.1]
 - Browser [e.g. stock browser, safari]
 - Version [e.g. 22]

**Additional context**
Add any other context about the problem here.

20

.github/ISSUE_TEMPLATE/feature_request.md

vendored

Normal file

@@ -0,0 +1,20 @@

---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: 'feature request'
assignees: ''

---

**Is your feature request related to a problem? Please describe.**
A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**
A clear and concise description of what you want to happen.

**Describe alternatives you've considered**
A clear and concise description of any alternative solutions or features you've considered.

**Additional context**
Add any other context or screenshots about the feature request here.

11

.github/ISSUE_TEMPLATE/question.md

vendored

Normal file

@@ -0,0 +1,11 @@

---
name: Question
about: Ask a Question
title: ''
labels: 'question'
assignees: ''

---

Ask a question...

22

.github/PULL_REQUEST_TEMPLATE.md

vendored

Normal file

@@ -0,0 +1,22 @@

(Thanks for sending a pull request! Please make sure you click the link above to view the contribution guidelines, then fill out the blanks below.)

What does this implement/fix? Explain your changes.
---------------------------------------------------
…

Does this close any currently open issues?
------------------------------------------
…


Any relevant logs, error output, etc?
-------------------------------------
(If it’s long, please paste to https://ghostbin.com/ and insert the link here.)

Any other comments?
-------------------
…

Where has this been tested?
---------------------------
…

21

.github/SECURITY.md

vendored

Normal file

@@ -0,0 +1,21 @@

# Security Policy

## Supported Versions

Use this section to tell people about which versions of your project are
currently being supported with security updates.

| Version | Supported          |
| ------- | ------------------ |
| 5.1.x   | :white_check_mark: |
| 5.0.x   | :x:                |
| 4.0.x   | :white_check_mark: |
| < 4.0   | :x:                |

## Reporting a Vulnerability

Use this section to tell people how to report a vulnerability.

Tell them where to go, how often they can expect to get an update on a
reported vulnerability, what to expect if the vulnerability is accepted or
declined, etc.

79

.github/auto-release.yml

vendored

Normal file

@@ -0,0 +1,79 @@

name-template: 'v$RESOLVED_VERSION 🚀'
tag-template: 'v$RESOLVED_VERSION'
version-template: '$MAJOR.$MINOR.$PATCH'
version-resolver:
  major:
    labels:
      - 'major'
  minor:
    labels:
      - 'minor'
      - 'enhancement'
      - 'feature'
      - 'dependency-update'
  patch:
    labels:
      - 'auto-update'
      - 'patch'
      - 'fix'
      - 'chore'
      - 'bugfix'
      - 'bug'
      - 'hotfix'
  default: 'patch'

categories:
  - title: '🚀 Enhancements'
    labels:
      - 'enhancement'
      - 'feature'
      - 'patch'
  - title: '⬆️ Upgrades'
    labels:
      - 'upgrades'
  - title: '🐛 Bug Fixes'
    labels:
      - 'fix'
      - 'bugfix'
      - 'bug'
      - 'hotfix'
  - title: '🤖 Automatic Updates'
    labels:
      - 'auto-update'
  - title: '📝 Documentation'
    labels:
      - 'docs'

autolabeler:
  - label: 'docs'
    files:
      - '*.md'
  - label: 'enhancement'
    title: '/enhancement|fixes/i'

  - label: 'upgrades'
    title: '/⬆️/i'

  - label: 'bugfix'
    title: '/bugfix/i'

  - label: 'bug'
    title: '/🐛|🐞|bug/i'

  - label: 'auto-update'
    title: '/🤖/i'

  - label: 'feature'
    title: '/🚀|🎉/i'

change-template: |
  <details>
  <summary>$TITLE @$AUTHOR (#$NUMBER)</summary>

  $BODY
  </details>

template: |
  ## Changes

  $CHANGES
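To make the `version-resolver` section of the configuration above more concrete, here is a minimal sketch of how a set of pull-request labels could map to a version bump. It assumes the highest-precedence match wins (major over minor over patch) and that the configured default applies when nothing matches; that precedence is an assumption of this sketch, and the function names `resolve_bump` and `next_version` are hypothetical rather than anything release-drafter exposes.

```python
# Label groups copied from the version-resolver section above.
VERSION_RESOLVER = {
    "major": {"major"},
    "minor": {"minor", "enhancement", "feature", "dependency-update"},
    "patch": {"auto-update", "patch", "fix", "chore", "bugfix", "bug", "hotfix"},
}
DEFAULT_BUMP = "patch"


def resolve_bump(pr_labels):
    """Return the bump level for a collection of labels (assumed precedence)."""
    labels = set(pr_labels)
    for bump in ("major", "minor", "patch"):
        if labels & VERSION_RESOLVER[bump]:
            return bump
    return DEFAULT_BUMP


def next_version(current, bump):
    """Apply a bump to a MAJOR.MINOR.PATCH string, as in version-template."""
    major, minor, patch = (int(part) for part in current.split("."))
    if bump == "major":
        return f"{major + 1}.0.0"
    if bump == "minor":
        return f"{major}.{minor + 1}.0"
    return f"{major}.{minor}.{patch + 1}"


bump = resolve_bump(["bug", "feature"])
print(bump, next_version("1.2.3", bump))
```

Note that a label such as `docs` appears only under `categories`, so in this sketch it falls through to the default bump.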

27

.github/workflows/auto-release.yml

vendored

Normal file

@@ -0,0 +1,27 @@

name: auto-release

on:
  push:
    # branches to consider in the event; optional, defaults to all
    branches:
      - main
  # pull_request event is required only for autolabeler
  pull_request:
    # Only the following types are handled by the action, but one can default to all as well
    types: [ opened, reopened, synchronize ]

jobs:
  publish:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      # Drafts your next Release notes as Pull Requests are merged into "main"
      - uses: release-drafter/release-drafter@v5
        with:
          publish: false
          prerelease: true
          config-name: auto-release.yml
          # allows the autolabeler to run without unmerged PRs being added to the draft
          disable-releaser: ${{ github.ref_name != 'main' }}
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

33

.github/workflows/build.yml

vendored

Normal file

@@ -0,0 +1,33 @@

# This workflow uses actions that are not certified by GitHub.
# They are provided by a third-party and are governed by
# separate terms of service, privacy policy, and support
# documentation.

# A sample workflow which checks out the code, builds a container
# image using Docker and scans that image for vulnerabilities using
# Snyk. The results are then uploaded to GitHub Security Code Scanning
#
# For more examples, including how to limit scans to only high-severity
# issues, monitor images for newly disclosed vulnerabilities in Snyk and
# fail PR checks for new vulnerabilities, see https://github.com/snyk/actions/

name: Build
on:
  push:
    branches: [ main ]
  pull_request:
    # The branches below must be a subset of the branches above
    branches: [ main ]

jobs:
  Run:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - name: Build & Deploy
        run: make setup && make up
      - name: Test Elasticsearch
        run: timeout 240s sh -c "until curl https://elastic:changeme@localhost:9200 --insecure --silent; do echo 'Elasticsearch Not Up, Retrying...'; sleep 3; done" && echo 'Elasticsearch is up'
      - name: Test Kibana
        run: timeout 240s sh -c "until curl https://localhost:5601 --insecure --silent -I; do echo 'Kibana Not Ready, Retrying...'; sleep 3; done" && echo 'Kibana is up'
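The two test steps in the workflow above follow the same pattern: probe the service, wait 3 seconds on failure, and give up after 240 seconds. The sketch below expresses that pattern as a reusable function. The name `wait_until_up` and the injectable `probe`, `sleep` and `clock` parameters are assumptions made for illustration so the loop can run without a live Elasticsearch or Kibana; the workflow itself uses `timeout`, `until` and `curl`.

```python
import time


def wait_until_up(probe, timeout_s=240.0, interval_s=3.0,
                  sleep=time.sleep, clock=time.monotonic):
    """Call probe() until it returns True; raise TimeoutError after timeout_s.

    Returns the number of attempts that were needed.
    """
    deadline = clock() + timeout_s
    attempts = 0
    while True:
        attempts += 1
        if probe():
            return attempts
        if clock() + interval_s > deadline:
            raise TimeoutError(f"service not up after {attempts} attempts")
        sleep(interval_s)


# Stand-in probe: fails twice, then succeeds (no network access involved).
responses = iter([False, False, True])
attempts = wait_until_up(lambda: next(responses), timeout_s=240, interval_s=0)
print(f"service is up after {attempts} attempts")
```

In real use the probe would wrap an HTTPS request to the service; it is left out here because certificate handling for the self-signed setup depends on the environment.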

4

.gitignore

vendored

Normal file

@@ -0,0 +1,4 @@

.idea/
.DS_Store
/secrets
tools/elastalert/rules/*

21

LICENSE

Normal file

@@ -0,0 +1,21 @@

MIT License

Copyright (c) 2022 Sherif Abdel-Naby

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

82

Makefile

vendored

Normal file

@@ -0,0 +1,82 @@

.DEFAULT_GOAL:=help

COMPOSE_ALL_FILES := -f docker-compose.yml -f docker-compose.monitor.yml -f docker-compose.nodes.yml -f docker-compose.logs.yml
COMPOSE_MONITORING := -f docker-compose.yml -f docker-compose.monitor.yml
COMPOSE_LOGGING := -f docker-compose.yml -f docker-compose.logs.yml
COMPOSE_NODES := -f docker-compose.yml -f docker-compose.nodes.yml
ELK_SERVICES := elasticsearch logstash kibana apm-server
ELK_LOG_COLLECTION := filebeat
ELK_MONITORING := elasticsearch-exporter logstash-exporter filebeat-cluster-logs
ELK_NODES := elasticsearch-1 elasticsearch-2
ELK_MAIN_SERVICES := ${ELK_SERVICES} ${ELK_MONITORING}
ELK_ALL_SERVICES := ${ELK_MAIN_SERVICES} ${ELK_NODES} ${ELK_LOG_COLLECTION}

compose_v2_not_supported = $(shell command docker compose 2> /dev/null)
ifeq (,$(compose_v2_not_supported))
  DOCKER_COMPOSE_COMMAND = docker-compose
else
  DOCKER_COMPOSE_COMMAND = docker compose
endif

# --------------------------
.PHONY: setup keystore certs all elk monitoring build down stop restart rm logs

keystore: ## Set up the Elasticsearch Keystore by initializing passwords and adding credentials defined in `keystore.sh`.
	$(DOCKER_COMPOSE_COMMAND) -f docker-compose.setup.yml run --rm keystore

certs: ## Generate Elasticsearch SSL Certs.
	$(DOCKER_COMPOSE_COMMAND) -f docker-compose.setup.yml run --rm certs

setup: ## Generate Elasticsearch SSL Certs and Keystore.
	@make certs
	@make keystore

all: ## Start ELK and all its components (ELK, Monitoring, and Tools).
	$(DOCKER_COMPOSE_COMMAND) ${COMPOSE_ALL_FILES} up -d --build ${ELK_MAIN_SERVICES}

elk: ## Start ELK.
	$(DOCKER_COMPOSE_COMMAND) up -d --build

up:
	@make elk
	@echo "Visit Kibana: https://localhost:5601 (user: elastic, password: changeme) [Unless you changed values in .env]"

monitoring: ## Start ELK Monitoring.
	$(DOCKER_COMPOSE_COMMAND) ${COMPOSE_MONITORING} up -d --build ${ELK_MONITORING}

collect-docker-logs: ## Start Filebeat, which collects all host Docker logs and ships them to ELK.
	$(DOCKER_COMPOSE_COMMAND) ${COMPOSE_LOGGING} up -d --build ${ELK_LOG_COLLECTION}

nodes: ## Start Two Extra Elasticsearch Nodes
	$(DOCKER_COMPOSE_COMMAND) ${COMPOSE_NODES} up -d --build ${ELK_NODES}

build: ## Build ELK and all its extra components.
	$(DOCKER_COMPOSE_COMMAND) ${COMPOSE_ALL_FILES} build ${ELK_ALL_SERVICES}
ps: ## Show all running containers.
	$(DOCKER_COMPOSE_COMMAND) ${COMPOSE_ALL_FILES} ps

down: ## Down ELK and all its extra components.
	$(DOCKER_COMPOSE_COMMAND) ${COMPOSE_ALL_FILES} down

stop: ## Stop ELK and all its extra components.
	$(DOCKER_COMPOSE_COMMAND) ${COMPOSE_ALL_FILES} stop ${ELK_ALL_SERVICES}

restart: ## Restart ELK and all its extra components.
	$(DOCKER_COMPOSE_COMMAND) ${COMPOSE_ALL_FILES} restart ${ELK_ALL_SERVICES}

rm: ## Remove the containers of ELK and all its extra components.
	$(DOCKER_COMPOSE_COMMAND) $(COMPOSE_ALL_FILES) rm -f ${ELK_ALL_SERVICES}

logs: ## Tail all logs with -n 1000.
	$(DOCKER_COMPOSE_COMMAND) $(COMPOSE_ALL_FILES) logs --follow --tail=1000 ${ELK_ALL_SERVICES}

images: ## Show all Images of ELK and all its extra components.
	$(DOCKER_COMPOSE_COMMAND) $(COMPOSE_ALL_FILES) images ${ELK_ALL_SERVICES}

prune: ## Remove ELK Containers and Delete ELK-related Volume Data (the elastic_elasticsearch-data volume)
	@make stop && make rm
	@docker volume prune -f --filter label=com.docker.compose.project=elastic

help: ## Show this help.
	@echo "Make Application Docker Images and Containers using Docker-Compose files in 'docker' Dir."
	@awk 'BEGIN {FS = ":.*##"; printf "\nUsage:\n make \033[36m<target>\033[0m (default: help)\n\nTargets:\n"} /^[a-zA-Z_-]+:.*?##/ { printf " \033[36m%-12s\033[0m %s\n", $$1, $$2 }' $(MAKEFILE_LIST)
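The `ifeq` block near the top of the Makefile above picks the Compose command from the output of a probe: if `docker compose` prints nothing to standard output, the Makefile falls back to `docker-compose`. Despite its name, `compose_v2_not_supported` holds the probe output, so it is non-empty when Compose v2 is available. The sketch below restates that decision; the function names are hypothetical, and treating whitespace-only output as empty is an approximation of how Make compares the value.

```python
import subprocess


def pick_compose_command(probe_output: str) -> str:
    """Empty probe output means Compose v2 is unavailable, as in the ifeq test."""
    return "docker compose" if probe_output.strip() else "docker-compose"


def probe_compose_v2() -> str:
    """Run `docker compose` and return its stdout, or "" if docker is missing."""
    try:
        result = subprocess.run(
            ["docker", "compose"], capture_output=True, text=True, check=False
        )
    except FileNotFoundError:
        return ""
    return result.stdout


if __name__ == "__main__":
    print(pick_compose_command(probe_compose_v2()))
```

Keeping the decision separate from the probe makes it possible to check the fallback logic on a machine without Docker installed.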

271

README.md

Normal file

@@ -0,0 +1,271 @@

<p align="center">
<img width="500px" src="https://user-images.githubusercontent.com/16992394/147855783-07b747f3-d033-476f-9e06-96a4a88a54c6.png">
</p>
<h2 align="center"><b>Elast</b>ic Stack on <b>Docker</b></h2>
<h3 align="center">Preconfigured Security, Tools, and Self-Monitoring</h3>
<h4 align="center">Configured to be ready to be used for Log, Metrics, APM, Alerting, Machine Learning, and Security (SIEM) use cases.</h4>
<p align="center">
   <a>
      <img src="https://img.shields.io/badge/Elastic%20Stack-8.10.2-blue?style=flat&logo=elasticsearch" alt="Elastic Stack Version 7^^">
   </a>
   <a>
      <img src="https://img.shields.io/github/v/tag/sherifabdlnaby/elastdocker?label=release&sort=semver">
   </a>
   <a href="https://github.com/sherifabdlnaby/elastdocker/actions/workflows/build.yml">
      <img src="https://github.com/sherifabdlnaby/elastdocker/actions/workflows/build.yml/badge.svg">
   </a>
   <a>
      <img src="https://img.shields.io/badge/Log4Shell-mitigated-brightgreen?style=flat&logo=java">
   </a>
   <a>
      <img src="https://img.shields.io/badge/contributions-welcome-brightgreen.svg?style=flat" alt="contributions welcome">
   </a>
   <a href="https://github.com/sherifabdlnaby/elastdocker/network">
      <img src="https://img.shields.io/github/forks/sherifabdlnaby/elastdocker.svg" alt="GitHub forks">
   </a>
   <a href="https://github.com/sherifabdlnaby/elastdocker/issues">
      <img src="https://img.shields.io/github/issues/sherifabdlnaby/elastdocker.svg" alt="GitHub issues">
   </a>
   <a href="https://raw.githubusercontent.com/sherifabdlnaby/elastdocker/blob/master/LICENSE">
      <img src="https://img.shields.io/badge/license-MIT-blue.svg" alt="GitHub license">
   </a>
</p>

# Introduction
Elastic Stack (**ELK**) Docker Composition, preconfigured with **Security**, **Monitoring**, and **Tools**; Up with a Single Command.

Suitable for demos, MVPs, and small production deployments.

Stack Version: [8.10.2](https://www.elastic.co/blog/whats-new-elastic-8-10-0) 🎉 - Based on [Official Elastic Docker Images](https://www.docker.elastic.co/)
> You can change the Elastic Stack version by setting `ELK_VERSION` in the `.env` file and rebuilding your images. Any version >= 8.0.0 is compatible with this template.
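The ">= 8.0.0" rule above is a numeric comparison on each version component, not a string comparison: as plain strings, `"8.10.2"` would sort before `"8.9.0"`. The sketch below shows one way to check an `ELK_VERSION` value against that rule. The names `parse_version`, `is_supported` and `MIN_SUPPORTED` are hypothetical and not part of the template, and the sketch assumes plain `MAJOR.MINOR.PATCH` versions without suffixes.

```python
MIN_SUPPORTED = (8, 0, 0)  # from the note above: any version >= 8.0.0


def parse_version(version: str) -> tuple[int, int, int]:
    """Turn 'MAJOR.MINOR.PATCH' into a tuple of integers."""
    major, minor, patch = (int(part) for part in version.strip().split("."))
    return major, minor, patch


def is_supported(elk_version: str) -> bool:
    """Compare component-wise as integers, so 8.10.2 ranks above 8.9.0."""
    return parse_version(elk_version) >= MIN_SUPPORTED


for candidate in ("8.10.2", "8.0.0", "7.17.9"):
    print(candidate, is_supported(candidate))
```

Tuples compare element by element, which is what gives the correct ordering for two-digit minor versions.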

|

||||

|

||||

### Main Features 📜

|

||||

|

||||

- Configured as a Production Single Node Cluster. (With a multi-node cluster option for experimenting).

|

||||

- Security Enabled By Default.

|

||||

- Configured to Enable:

|

||||

- Logging & Metrics Ingestion

|

||||

- Option to collect logs of all Docker Containers running on the host. via `make collect-docker-logs`.

|

||||

- APM

|

||||

- Alerting

|

||||

- Machine Learning

|

||||

- Anomaly Detection

|

||||

- SIEM (Security information and event management).

|

||||

- Enabling Trial License

|

||||

- Use Docker-Compose and `.env` to configure your entire stack parameters.

|

||||

- Persist Elasticsearch's Keystore and SSL Certifications.

|

||||

- Self-Monitoring Metrics Enabled.

|

||||

- Prometheus Exporters for Stack Metrics.

|

||||

- Embedded Container Healthchecks for Stack Images.

#### More points

Comparing Elastdocker with the popular [deviantony/docker-elk](https://github.com/deviantony/docker-elk):

<details><summary>Expand...</summary>
<p>

One of the most popular ELK on Docker repositories is the awesome [deviantony/docker-elk](https://github.com/deviantony/docker-elk).
Elastdocker differs from `deviantony/docker-elk` in the following points.

- Security enabled by default using Basic license, not Trial.

- Persisting data by default in a volume.

- Running in Production Mode (by enabling SSL on the transport layer and adding initial master node settings).

- Persisting the generated Keystore, and providing an extensible script that makes it easier to recreate it every time the container is created.

- Parameterizing credentials in `.env` instead of hardcoding `elastic:changeme` in every component config.

- Parameterizing all other config, such as heap size.

- Adding recommended environment configuration, such as ulimits and disabled swap, to the docker-compose file.

- Making it ready to be extended into a multinode cluster.

- Configuring Self-Monitoring and the Filebeat agent that ships ELK logs to ELK itself (as a step towards shipping them to a monitoring cluster in the future).

- Configured Prometheus Exporters.

- The Makefile that simplifies everything into some simple commands.

</p>
</details>

-----

# Requirements

- [Docker 20.05 or higher](https://docs.docker.com/install/)
- [Docker-Compose 1.29 or higher](https://docs.docker.com/compose/install/)
- 4GB RAM (on Windows and macOS, make sure Docker's VM has more than 4GB of memory)

# Setup

1. Clone the Repository
    ```bash
    git clone https://github.com/sherifabdlnaby/elastdocker.git
    ```
2. Initialize Elasticsearch Keystore and TLS Self-Signed Certificates
    ```bash
    $ make setup
    ```
    > **For Linux Docker hosts only**. By default, virtual memory [is not enough](https://www.elastic.co/guide/en/elasticsearch/reference/current/vm-max-map-count.html), so run the following command as root: `sysctl -w vm.max_map_count=262144`
3. Start Elastic Stack
    ```bash
    $ make elk
    # or: docker-compose up -d   (or: docker compose up -d)
    ```
4. Visit Kibana at [https://localhost:5601](https://localhost:5601) or `https://<your_public_ip>:5601`

    Default Username: `elastic`, Password: `changeme`

    > - Notice that Kibana is configured to use HTTPS, so you'll need to write `https://` before `localhost:5601` in the browser.
    > - Modify the `.env` file to suit your needs, most importantly `ELASTIC_PASSWORD`, which sets the password of the `elastic` superuser, and `ELASTICSEARCH_HEAP` and `LOGSTASH_HEAP`, which set the heap sizes of Elasticsearch and Logstash.

> Whatever your host (e.g. AWS EC2, Azure, DigitalOcean, or an on-premise server), once you expose it to the network, the ELK components will be accessible on their respective ports. Since the enabled TLS uses a self-signed certificate, it is recommended to SSL-terminate public traffic using your own signed certificates.

> 🏃🏻‍♂️ To start ingesting logs, run `make collect-docker-logs`, which collects your host's container logs.
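
Once the containers are up, one way to confirm that Elasticsearch answers over HTTPS is to query the cluster health endpoint from the host. The sketch below assumes the default port `9200` from `.env`, the CA that `make setup` writes to `secrets/certs/ca/ca.crt`, and a certificate that is valid for `localhost` (if it is not, `--insecure` can stand in for `--cacert`). `es_url` and `es_health` are hypothetical helper names, not part of the repository.

```shell
# Hypothetical helpers for a quick health check; not part of elastdocker.

# Build the Elasticsearch base URL from host and port (defaults mirror .env).
es_url() {
  printf 'https://%s:%s\n' "${1:-localhost}" "${2:-9200}"
}

# Query cluster health, trusting the CA generated by `make setup`.
# Uses ELASTIC_PASSWORD if it is exported, otherwise the default from .env.
es_health() {
  curl --silent --fail \
    --cacert secrets/certs/ca/ca.crt \
    --user "elastic:${ELASTIC_PASSWORD:-changeme}" \
    "$(es_url "$@")/_cluster/health?pretty"
}

# Usage (from the repository root, once the stack is running):
#   es_health
#   es_health localhost 9200
```

A `green` or `yellow` status in the response matches what the container healthcheck in `docker-compose.yml` looks for.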

## Additional Commands

<details><summary>Expand</summary>
<p>

#### To Start Monitoring and Prometheus Exporters
```shell
$ make monitoring
```
#### To Ship Docker Container Logs to ELK
```shell
$ make collect-docker-logs
```
#### To Start **Elastic Stack, Tools and Monitoring**
```shell
$ make all
```
#### To Start 2 Extra Elasticsearch nodes (recommended for experimenting only)
```shell
$ make nodes
```
#### To Rebuild Images
```shell
$ make build
```
#### Bring down the stack.
```shell
$ make down
```

#### Reset everything, Remove all containers, and delete **DATA**!
```shell
$ make prune
```

</p>
</details>
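
The optional services live in separate compose files (`docker-compose.monitor.yml`, `docker-compose.logs.yml`, `docker-compose.nodes.yml`), so they can also be layered onto the main file with `-f` when calling Compose directly. The sketch below is an assumption about equivalent invocations, not a copy of what the Makefile runs; `compose_args` is a hypothetical helper.

```shell
# Hypothetical helper: build the -f arguments for the main compose file
# plus any of the optional ones (monitor, logs, nodes).
compose_args() {
  args="-f docker-compose.yml"
  for extra in "$@"; do
    args="$args -f docker-compose.$extra.yml"
  done
  printf '%s\n' "$args"
}

# Usage (word splitting of the helper output is intentional):
#   docker compose $(compose_args monitor) up -d
#   docker compose $(compose_args monitor logs nodes) down
```

`make help` remains the authoritative list of what each target does.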

# Configuration

* Some configuration is parameterized in the `.env` file.
  * `ELASTIC_PASSWORD`, user `elastic`'s password (default: `changeme` _pls_).
  * `ELK_VERSION`, the Elastic Stack version (default: `8.10.2`).
  * `ELASTICSEARCH_HEAP`, how much memory Elasticsearch allocates for its heap (default: 1GB, good for development only).
  * `LOGSTASH_HEAP`, how much memory Logstash allocates for its heap.
  * Other configuration, such as cluster name and node names.
* Elasticsearch configuration in `elasticsearch.yml` at `./elasticsearch/config`.
* Logstash configuration in `logstash.yml` at `./logstash/config`.
* Logstash pipeline in `main.conf` at `./logstash/pipeline`.
* Kibana configuration in `kibana.yml` at `./kibana/config`.
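
Since these values are plain `KEY=VALUE` lines, changing one can be scripted. The helper below is a minimal sketch, assuming a simple `.env` without quoting or multi-line values; `set_env_var` is a hypothetical name. It rewrites the file with the key moved to the end.

```shell
# Hypothetical helper: set KEY=VALUE in an env file, replacing any existing line.
set_env_var() {
  file=$1; key=$2; value=$3
  tmp=$(mktemp)
  # Keep every line except a previous assignment of the same key.
  grep -v "^${key}=" "$file" > "$tmp" || true
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # Overwrite in place so the original file keeps its permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage (from the repository root):
#   set_env_var .env ELASTIC_PASSWORD "$(openssl rand -hex 16)"
#   make setup   # regenerate the keystore, then restart the stack
```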

### Setting Up Keystore

You can extend the Keystore generation script by adding keys to the `./setup/keystore.sh` script (e.g. to add S3 Snapshot Repository credentials).

To regenerate the Keystore:
```
make keystore
```
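
As an illustration of the S3 case, the lines below show what an addition to the keystore script might look like. This is a sketch under several assumptions: the script runs inside the Elasticsearch image, where `elasticsearch-keystore` is available; the default S3 client is used; and `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` from `.env` are passed to the `keystore` service in `docker-compose.setup.yml`, which by default only receives `ELASTIC_PASSWORD`. `add_secret` and `KEYSTORE_BIN` are hypothetical names.

```shell
# Hypothetical helper: store one secure setting, feeding the value on stdin.
# KEYSTORE_BIN can be overridden; it defaults to the tool shipped with Elasticsearch.
add_secret() {
  name=$1; value=$2
  printf '%s' "$value" | "${KEYSTORE_BIN:-elasticsearch-keystore}" add --stdin --force "$name"
}

# Usage inside the keystore script:
#   add_secret s3.client.default.access_key "$AWS_ACCESS_KEY_ID"
#   add_secret s3.client.default.secret_key "$AWS_SECRET_ACCESS_KEY"
```

`--force` overwrites an existing entry without prompting, which suits a script that is rerun by `make keystore`.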

### Notes

- ⚠️ The Elasticsearch HTTP layer uses SSL, which means you need to configure your Elasticsearch clients with the `CA` in `secrets/certs/ca/ca.crt`, or configure the client to ignore SSL certificate verification (e.g. `--insecure` in `curl`).

- Adding two extra nodes to the cluster makes the cluster depend on them; it won't start again without them.

- The Makefile is a wrapper around `Docker-Compose` commands; use `make help` to list every command.

- Elasticsearch will save its data to a volume named `elasticsearch-data`.

- The Elasticsearch Keystore (which contains passwords and credentials) and the SSL certificates are generated in the `./secrets` directory by the setup command.

- Make sure to run `make setup` if you change `ELASTIC_PASSWORD`, and restart the stack afterwards.

- For Linux users, it's recommended to set the following configuration (run as `root`):
    ```
    sysctl -w vm.max_map_count=262144
    ```
    By default, virtual memory [is not enough](https://www.elastic.co/guide/en/elasticsearch/reference/current/vm-max-map-count.html).
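
A small guard like the following could be run on a Linux host before starting the stack; it raises the setting only when the current value is below `262144`. `needs_bump` and `ensure_map_count` are hypothetical helpers. Note that a value set with `sysctl -w` does not survive a reboot unless it is also written to `/etc/sysctl.conf`.

```shell
required=262144

# Succeeds (exit status 0) when the current value is below the required one.
needs_bump() {
  [ "$1" -lt "$2" ]
}

# Read the live value and raise it only if needed (run as root on Linux).
ensure_map_count() {
  current=$(cat /proc/sys/vm/max_map_count)
  if needs_bump "$current" "$required"; then
    sysctl -w vm.max_map_count="$required"
  fi
}

# Usage:
#   ensure_map_count
```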

---------------------------

# Working with Elastic APM

After completing the setup step, you will notice a container named `apm-server`, which gives you deeper visibility into your applications and can help you identify and resolve root-cause issues with correlated traces, logs, and metrics.

## Authenticating with Elastic APM

In order to authenticate with Elastic APM, you will need the following:

- The value of `ELASTIC_APM_SECRET_TOKEN` defined in the `.env` file, as the [secret token](https://www.elastic.co/guide/en/apm/guide/master/secret-token.html) is enabled by default.
- The ability to reach port `8200`.
- An Elastic APM client installed in your application, e.g. for Node.js-based applications you need to install [elastic-apm-node](https://www.elastic.co/guide/en/apm/agent/nodejs/master/typescript.html).
- The package imported in your application and its start function called. For a Node.js-based application you can do the following:

```
const apm = require('elastic-apm-node').start({
  serviceName: 'foobar',
  secretToken: process.env.ELASTIC_APM_SECRET_TOKEN,

  // https is enabled by default as per elastdocker configuration
  // (the certificate is self-signed, so the agent may also need
  // `verifyServerCert: false` or `serverCaCertFile`)
  serverUrl: 'https://localhost:8200',
})
```
> Make sure that the agent is started before you require any other modules in your Node.js application, i.e. before express, http, etc., as mentioned in [Elastic APM Agent - NodeJS initialization](https://www.elastic.co/guide/en/apm/agent/nodejs/master/express.html#express-initialization)

For more details or other languages you can check the following:
- [APM Agents in different languages](https://www.elastic.co/guide/en/apm/agent/index.html)
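
Before wiring an agent into an application, it can help to confirm from the host that the APM Server is reachable and accepts the token. The sketch below calls the server's root endpoint and sends the secret token as a bearer token; it assumes the server listens on `localhost:8200` and skips certificate verification because the certificate is self-signed. `apm_auth_header` and `apm_ping` are hypothetical helper names.

```shell
# Hypothetical helpers for a quick APM Server check; not part of elastdocker.

# Format the Authorization header used with a secret token.
apm_auth_header() {
  printf 'Authorization: Bearer %s\n' "$1"
}

# Call the APM Server root endpoint with the token from the environment.
apm_ping() {
  if [ -z "${ELASTIC_APM_SECRET_TOKEN:-}" ]; then
    echo "ELASTIC_APM_SECRET_TOKEN is not set" >&2
    return 1
  fi
  curl --silent --fail --insecure \
    --header "$(apm_auth_header "$ELASTIC_APM_SECRET_TOKEN")" \
    "https://${1:-localhost}:${2:-8200}/"
}

# Usage:
#   export ELASTIC_APM_SECRET_TOKEN=...   # value from .env
#   apm_ping
```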

|

||||

|

||||

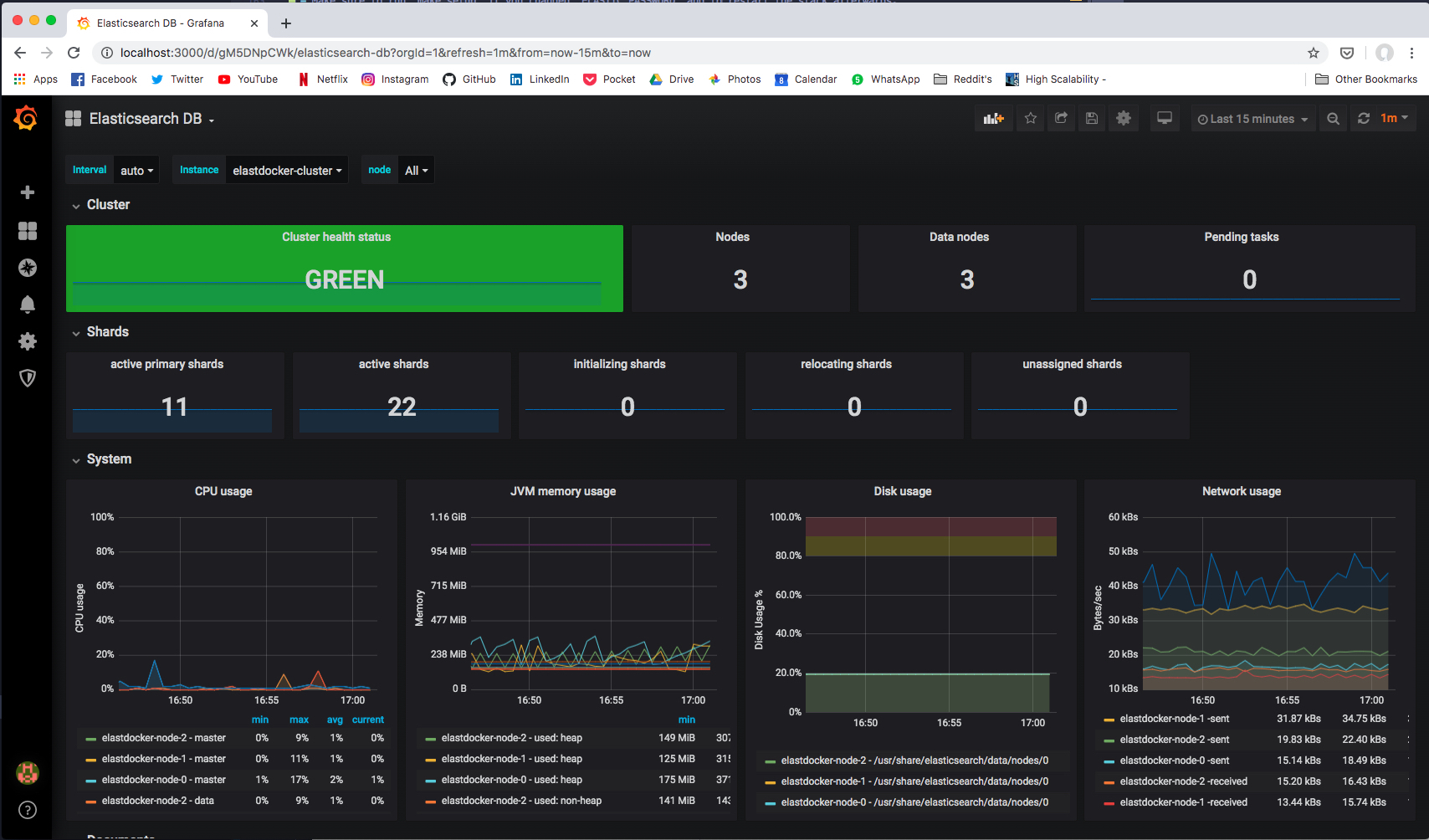

# Monitoring The Cluster

|

||||

|

||||

### Via Self-Monitoring

|

||||

|

||||

Head to Stack Monitoring tab in Kibana to see cluster metrics for all stack components.

|

||||

|

||||

|

||||

|

||||

|

||||

> In Production, cluster metrics should be shipped to another dedicated monitoring cluster.

|

||||

|

||||

### Via Prometheus Exporters

|

||||

If you started Prometheus Exporters using `make monitoring` command. Prometheus Exporters will expose metrics at the following ports.

|

||||

|

||||

| **Prometheus Exporter** | **Port** | **Recommended Grafana Dashboard** |

|

||||

|-------------------------- |---------- |------------------------------------------------ |

|

||||

| `elasticsearch-exporter` | `9114` | [Elasticsearch by Kristian Jensen](https://grafana.com/grafana/dashboards/4358) |

|

||||

| `logstash-exporter` | `9304` | [logstash-monitoring by dpavlos](https://github.com/dpavlos/logstash-monitoring) |
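
To check that an exporter is up before pointing Prometheus at it, you can fetch its metrics page from the host. The sketch assumes both exporters serve the conventional `/metrics` path on the ports listed in the table; `metrics_url` and `show_metrics` are hypothetical helpers.

```shell
# Hypothetical helpers; not part of elastdocker.

# Build the metrics URL for a given port (host defaults to localhost).
metrics_url() {
  printf 'http://%s:%s/metrics\n' "${2:-localhost}" "$1"
}

# Print the first few metric lines of an exporter.
show_metrics() {
  curl --silent --fail "$(metrics_url "$@")" | head -n 20
}

# Usage:
#   show_metrics 9114   # elasticsearch-exporter
#   show_metrics 9304   # logstash-exporter
```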

# License
[MIT License](https://raw.githubusercontent.com/sherifabdlnaby/elastdocker/master/LICENSE)
Copyright (c) 2022 Sherif Abdel-Naby

# Contribution

PRs are open and welcome.

5

apm-server/Dockerfile

Normal file

@@ -0,0 +1,5 @@

ARG ELK_VERSION

# https://github.com/elastic/apm-server
FROM docker.elastic.co/apm/apm-server:${ELK_VERSION}
ARG ELK_VERSION

101

apm-server/config/apm-server.yml

Normal file

@@ -0,0 +1,101 @@

######################### APM Server Configuration #########################

################################ APM Server ################################

apm-server:
  # Defines the host and port the server is listening on. Use "unix:/path/to.sock" to listen on a unix domain socket.
  host: "0.0.0.0:8200"


  #---------------------------- APM Server - Secure Communication with Agents ----------------------------

  # Enable authentication using Secret token
  auth:
    secret_token: '${ELASTIC_APM_SECRET_TOKEN}'

  # Enable secure communication between APM agents and the server. By default ssl is disabled.
  ssl:
    enabled: true

    # Path to file containing the certificate for server authentication.
    # Needs to be configured when ssl is enabled.
    certificate: "/certs/apm-server.crt"

    # Path to file containing server certificate key.
    # Needs to be configured when ssl is enabled.
    key: "/certs/apm-server.key"

#================================ Outputs =================================

# Configure the output to use when sending the data collected by apm-server.

#-------------------------- Elasticsearch output --------------------------
output.elasticsearch:
  # Array of hosts to connect to.
  # Scheme and port can be left out and will be set to the default (`http` and `9200`).
  # In case you specify an additional path, the scheme is required: `http://elasticsearch:9200/path`.
  # IPv6 addresses should always be defined as: `https://[2001:db8::1]:9200`.
  hosts: '${ELASTICSEARCH_HOST_PORT}'

  # Boolean flag to enable or disable the output module.
  enabled: true

  # Protocol - either `http` (default) or `https`.
  protocol: "https"

  # Authentication credentials
  username: '${ELASTIC_USERNAME}'
  password: '${ELASTIC_PASSWORD}'

  # Enable custom SSL settings. Set to false to ignore custom SSL settings for secure communication.
  ssl.enabled: true

  # List of root certificates for HTTPS server verifications.
  ssl.certificate_authorities: ["/certs/ca.crt"]

  # Certificate for SSL client authentication.
  ssl.certificate: "/certs/apm-server.crt"

  # Client Certificate Key
  ssl.key: "/certs/apm-server.key"

#============================= X-pack Monitoring =============================

# APM server can export internal metrics to a central Elasticsearch monitoring
# cluster. This requires x-pack monitoring to be enabled in Elasticsearch. The
# reporting is disabled by default.

# Set to true to enable the monitoring reporter.
monitoring.enabled: true

# Most settings from the Elasticsearch output are accepted here as well.
# Note that these settings should be configured to point to your Elasticsearch *monitoring* cluster.
# Any setting that is not set is automatically inherited from the Elasticsearch
# output configuration. This means that if you have the Elasticsearch output configured,
# you can simply uncomment the following line.
monitoring.elasticsearch:

  # Protocol - either `http` (default) or `https`.
  protocol: "https"

  # Authentication credentials
  username: '${ELASTIC_USERNAME}'
  password: '${ELASTIC_PASSWORD}'

  # Array of hosts to connect to.
  # Scheme and port can be left out and will be set to the default (`http` and `9200`).
  # In case you specify an additional path, the scheme is required: `http://elasticsearch:9200/path`.
  # IPv6 addresses should always be defined as: `https://[2001:db8::1]:9200`.
  hosts: '${ELASTICSEARCH_HOST_PORT}'

  # Enable custom SSL settings. Set to false to ignore custom SSL settings for secure communication.
  ssl.enabled: true

  # List of root certificates for HTTPS server verifications.
  ssl.certificate_authorities: ["/certs/ca.crt"]

  # Certificate for SSL client authentication.
  ssl.certificate: "/certs/apm-server.crt"

  # Client Certificate Key
  ssl.key: "/certs/apm-server.key"

24

docker-compose.logs.yml

Normal file

@@ -0,0 +1,24 @@

version: '3.5'

# will contain filebeat's data.
volumes:
  filebeat-data:

services:
  # Docker Logs Shipper ------------------------------
  filebeat:
    image: docker.elastic.co/beats/filebeat:${ELK_VERSION}
    restart: always
    # -e flag to log to stderr and disable syslog/file output
    command: -e --strict.perms=false
    user: root
    environment:
      ELASTIC_USERNAME: ${ELASTIC_USERNAME}
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD}
      KIBANA_HOST_PORT: ${KIBANA_HOST}:${KIBANA_PORT}
      ELASTICSEARCH_HOST_PORT: https://${ELASTICSEARCH_HOST}:${ELASTICSEARCH_PORT}
    volumes:
      - ./filebeat/filebeat.docker.logs.yml:/usr/share/filebeat/filebeat.yml:ro
      - /var/lib/docker/containers:/var/lib/docker/containers:ro
      - /var/run/docker.sock:/var/run/docker.sock:ro
      - filebeat-data:/var/lib/filebeat/data

39

docker-compose.monitor.yml

Normal file

@@ -0,0 +1,39 @@

version: '3.5'

services:

  # Prometheus Exporters ------------------------------
  elasticsearch-exporter:
    image: justwatch/elasticsearch_exporter:1.1.0
    restart: always
    command: ["--es.uri", "https://${ELASTIC_USERNAME}:${ELASTIC_PASSWORD}@${ELASTICSEARCH_HOST}:${ELASTICSEARCH_PORT}",
              "--es.ssl-skip-verify",
              "--es.all",
              "--es.snapshots",
              "--es.indices"]
    ports:
      - "9114:9114"

  logstash-exporter:
    image: alxrem/prometheus-logstash-exporter
    restart: always
    ports:
      - "9304:9304"
    command: ["-logstash.host", "${LOGSTASH_HOST}"]

  # Cluster Logs Shipper ------------------------------
  filebeat-cluster-logs:
    image: docker.elastic.co/beats/filebeat:${ELK_VERSION}
    restart: always
    # -e flag to log to stderr and disable syslog/file output
    command: -e --strict.perms=false
    user: root
    environment:
      ELASTIC_USERNAME: ${ELASTIC_USERNAME}
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD}
      KIBANA_HOST_PORT: ${KIBANA_HOST}:${KIBANA_PORT}
      ELASTICSEARCH_HOST_PORT: https://${ELASTICSEARCH_HOST}:${ELASTICSEARCH_PORT}
    volumes:
      - ./filebeat/filebeat.monitoring.yml:/usr/share/filebeat/filebeat.yml:ro
      - /var/lib/docker/containers:/var/lib/docker/containers:ro
      - /var/run/docker.sock:/var/run/docker.sock:ro

82

docker-compose.nodes.yml

Normal file

@@ -0,0 +1,82 @@

version: '3.5'

# will contain all elasticsearch data.
volumes:
  elasticsearch-data-1:
  elasticsearch-data-2:

services:
  elasticsearch-1:
    image: elastdocker/elasticsearch:${ELK_VERSION}
    build:
      context: elasticsearch/
      args:
        ELK_VERSION: ${ELK_VERSION}
    restart: unless-stopped
    environment:
      ELASTIC_USERNAME: ${ELASTIC_USERNAME}
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD}
      ELASTIC_CLUSTER_NAME: ${ELASTIC_CLUSTER_NAME}
      ELASTIC_NODE_NAME: ${ELASTIC_NODE_NAME_1}
      ELASTIC_INIT_MASTER_NODE: ${ELASTIC_INIT_MASTER_NODE}
      ELASTIC_DISCOVERY_SEEDS: ${ELASTIC_DISCOVERY_SEEDS}
      ELASTICSEARCH_PORT: ${ELASTICSEARCH_PORT}
      ES_JAVA_OPTS: -Xmx${ELASTICSEARCH_HEAP} -Xms${ELASTICSEARCH_HEAP} -Des.enforce.bootstrap.checks=true
      bootstrap.memory_lock: "true"
    volumes:
      - elasticsearch-data-1:/usr/share/elasticsearch/data
      - ./elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./elasticsearch/config/log4j2.properties:/usr/share/elasticsearch/config/log4j2.properties
    secrets:
      - source: elasticsearch.keystore
        target: /usr/share/elasticsearch/config/elasticsearch.keystore
      - source: elastic.ca
        target: /usr/share/elasticsearch/config/certs/ca.crt
      - source: elasticsearch.certificate
        target: /usr/share/elasticsearch/config/certs/elasticsearch.crt
      - source: elasticsearch.key
        target: /usr/share/elasticsearch/config/certs/elasticsearch.key
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 200000
        hard: 200000
  elasticsearch-2:
    image: elastdocker/elasticsearch:${ELK_VERSION}
    build:
      context: elasticsearch/
      args:
        ELK_VERSION: ${ELK_VERSION}
    restart: unless-stopped
    environment:
      ELASTIC_USERNAME: ${ELASTIC_USERNAME}
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD}
      ELASTIC_CLUSTER_NAME: ${ELASTIC_CLUSTER_NAME}
      ELASTIC_NODE_NAME: ${ELASTIC_NODE_NAME_2}
      ELASTIC_INIT_MASTER_NODE: ${ELASTIC_INIT_MASTER_NODE}
      ELASTIC_DISCOVERY_SEEDS: ${ELASTIC_DISCOVERY_SEEDS}
      ELASTICSEARCH_PORT: ${ELASTICSEARCH_PORT}
      ES_JAVA_OPTS: -Xmx${ELASTICSEARCH_HEAP} -Xms${ELASTICSEARCH_HEAP} -Des.enforce.bootstrap.checks=true
      bootstrap.memory_lock: "true"
    volumes:
      - elasticsearch-data-2:/usr/share/elasticsearch/data
      - ./elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./elasticsearch/config/log4j2.properties:/usr/share/elasticsearch/config/log4j2.properties
    secrets:
      - source: elasticsearch.keystore
        target: /usr/share/elasticsearch/config/elasticsearch.keystore
      - source: elastic.ca
        target: /usr/share/elasticsearch/config/certs/ca.crt
      - source: elasticsearch.certificate
        target: /usr/share/elasticsearch/config/certs/elasticsearch.crt
      - source: elasticsearch.key
        target: /usr/share/elasticsearch/config/certs/elasticsearch.key
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 200000
        hard: 200000

28

docker-compose.setup.yml

Normal file

@@ -0,0 +1,28 @@

version: '3.5'

services:
  keystore:
    image: elastdocker/elasticsearch:${ELK_VERSION}
    build:
      context: elasticsearch/
      args:
        ELK_VERSION: ${ELK_VERSION}
    command: bash /setup/setup-keystore.sh
    user: "0"
    volumes:
      - ./secrets:/secrets
      - ./setup/:/setup/
    environment:
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD}

  certs:
    image: elastdocker/elasticsearch:${ELK_VERSION}
    build:
      context: elasticsearch/
      args:
        ELK_VERSION: ${ELK_VERSION}
    command: bash /setup/setup-certs.sh
    user: "0"
    volumes:
      - ./secrets:/secrets
      - ./setup/:/setup

152

docker-compose.yml

Normal file

@@ -0,0 +1,152 @@

version: '3.5'

# To Join any other app setup using another network, change name and set external = true
networks:
  default:
    name: elastic
    external: false

# will contain all elasticsearch data.
volumes:
  elasticsearch-data:

secrets:
  elasticsearch.keystore:
    file: ./secrets/keystore/elasticsearch.keystore
  elasticsearch.service_tokens:
    file: ./secrets/service_tokens
  elastic.ca:
    file: ./secrets/certs/ca/ca.crt
  elasticsearch.certificate:
    file: ./secrets/certs/elasticsearch/elasticsearch.crt
  elasticsearch.key:
    file: ./secrets/certs/elasticsearch/elasticsearch.key
  kibana.certificate:
    file: ./secrets/certs/kibana/kibana.crt
  kibana.key:
    file: ./secrets/certs/kibana/kibana.key
  apm-server.certificate:
    file: ./secrets/certs/apm-server/apm-server.crt
  apm-server.key:
    file: ./secrets/certs/apm-server/apm-server.key

services:
  elasticsearch:
    image: elastdocker/elasticsearch:${ELK_VERSION}
    build:
      context: elasticsearch/
      args:
        ELK_VERSION: ${ELK_VERSION}
    restart: unless-stopped
    environment:
      ELASTIC_USERNAME: ${ELASTIC_USERNAME}
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD}
      ELASTIC_CLUSTER_NAME: ${ELASTIC_CLUSTER_NAME}
      ELASTIC_NODE_NAME: ${ELASTIC_NODE_NAME}
      ELASTIC_INIT_MASTER_NODE: ${ELASTIC_INIT_MASTER_NODE}
      ELASTIC_DISCOVERY_SEEDS: ${ELASTIC_DISCOVERY_SEEDS}
      ELASTICSEARCH_PORT: ${ELASTICSEARCH_PORT}
      ES_JAVA_OPTS: "-Xmx${ELASTICSEARCH_HEAP} -Xms${ELASTICSEARCH_HEAP} -Des.enforce.bootstrap.checks=true -Dlog4j2.formatMsgNoLookups=true"
      bootstrap.memory_lock: "true"
    volumes:
      - elasticsearch-data:/usr/share/elasticsearch/data
      - ./elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./elasticsearch/config/log4j2.properties:/usr/share/elasticsearch/config/log4j2.properties
    secrets:
      - source: elasticsearch.keystore
        target: /usr/share/elasticsearch/config/elasticsearch.keystore
      - source: elasticsearch.service_tokens
        target: /usr/share/elasticsearch/config/service_tokens
      - source: elastic.ca
        target: /usr/share/elasticsearch/config/certs/ca.crt
      - source: elasticsearch.certificate
        target: /usr/share/elasticsearch/config/certs/elasticsearch.crt
      - source: elasticsearch.key
        target: /usr/share/elasticsearch/config/certs/elasticsearch.key
    ports:
      - "${ELASTICSEARCH_PORT}:${ELASTICSEARCH_PORT}"
      - "9300:9300"
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 200000
        hard: 200000
    healthcheck:
      test: ["CMD", "sh", "-c", "curl -sf --insecure https://$ELASTIC_USERNAME:$ELASTIC_PASSWORD@localhost:$ELASTICSEARCH_PORT/_cat/health | grep -ioE 'green|yellow' || echo 'not green/yellow cluster status'"]

  logstash:
    image: elastdocker/logstash:${ELK_VERSION}
    build:
      context: logstash/
      args:
        ELK_VERSION: $ELK_VERSION
    restart: unless-stopped
    volumes:
      - ./logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml:ro
      - ./logstash/config/pipelines.yml:/usr/share/logstash/config/pipelines.yml:ro
      - ./logstash/pipeline:/usr/share/logstash/pipeline:ro
    secrets:
      - source: elastic.ca
        target: /certs/ca.crt
    environment:
      ELASTIC_USERNAME: ${ELASTIC_USERNAME}
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD}
      ELASTICSEARCH_HOST_PORT: https://${ELASTICSEARCH_HOST}:${ELASTICSEARCH_PORT}
      LS_JAVA_OPTS: "-Xmx${LOGSTASH_HEAP} -Xms${LOGSTASH_HEAP} -Dlog4j2.formatMsgNoLookups=true"
    ports:
      - "5044:5044"
      - "9600:9600"
    healthcheck:
      test: ["CMD", "curl", "-s" ,"-XGET", "http://127.0.0.1:9600"]

  kibana:
    image: elastdocker/kibana:${ELK_VERSION}
    build:
      context: kibana/
      args:
        ELK_VERSION: $ELK_VERSION
    restart: unless-stopped
    volumes:
      - ./kibana/config/:/usr/share/kibana/config:ro
    environment:
      ELASTIC_USERNAME: ${ELASTIC_USERNAME}
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD}
      ELASTICSEARCH_HOST_PORT: https://${ELASTICSEARCH_HOST}:${ELASTICSEARCH_PORT}
      KIBANA_PORT: ${KIBANA_PORT}
    env_file:
      - ./secrets/.env.kibana.token
    secrets:
      - source: elastic.ca
        target: /certs/ca.crt
      - source: kibana.certificate
        target: /certs/kibana.crt
      - source: kibana.key
        target: /certs/kibana.key
    ports:

|

||||